Comprehensive Guide to Optimizing WordPress Robots.txt

Knowing how to optimize WordPress Robots.txt is a must for online success. To get your website indexed swiftly, rank better, and perform well in SEO, you need to plan and build a sound robots.txt file for WordPress. This becomes especially crucial when you rely on powerful SEO tools. The Robots.txt file tells search engine bots how to crawl and index your site. Read the complete article to learn how you can optimize WordPress Robots.txt for SEO.

There are approximately 1.5 billion websites globally. However, fewer than 200 million of them are active. Many websites get lost in the competitive online world for various reasons, including a poorly optimized WordPress Robots.txt file. If you want to keep your website SEO friendly, it is essential to focus on optimizing it, and that is why optimizing WordPress Robots.txt is so important.


Have you ever optimized your WordPress Robots.txt file for SEO? If not, you may be losing the chance to get listed properly in search engines. The Robots.txt file plays a crucial role in SEO.

If you have a WordPress-based website, the file is created automatically. Having the file is a good start, but you still need to make sure it is optimized to give your brand a competitive advantage. Before digging deep into the topic, let’s understand what Robots.txt is, how it is useful, and how you can optimize it.

What is Robots.txt? Why is it Essential for Better SEO?

Robots.txt is the file that lets search engine bots know which pages should and should not be crawled. It’s a crucial part of the robots exclusion protocol, a group of web standards that govern how robots crawl a website, read its content, and present that information to your users.

When a search bot comes to your site, it reads the robots.txt file and follows its guidance. If the file is absent, bots generally assume they may crawl the whole site, so you lose control over what gets crawled.

Alongside robots.txt, the robots exclusion protocol covers directives such as meta robots tags as well as page-, subdirectory-, or site-wide instructions. These help search engines determine how each link has to be treated, that is, whether it must be considered “dofollow” or “nofollow.” In simple words, robots.txt tells search engines whether specific user agents can or can’t crawl specific website sections. The instructions are specified by “allowing” or “disallowing” specific user agents.

Format of Robots.txt File:

User-agent: [name of user agent]
Disallow: [URL path not to be crawled]

For example, the following two lines tell every crawler to stay out of the entire site:

User-agent: *
Disallow: /

Together, these two lines form a complete robots.txt file. A single file can contain multiple user agents and directives such as Allow and Disallow. Each set of user-agent directives forms a discrete group, separated from the next by a line break.

Example of a Simple Robots.txt File:

User-agent: Googlebot
Disallow: /nogooglebot/

User-agent: *
Allow: /

Sitemap: http://www.example.com/sitemap.xml
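One way to see how a crawler would read the simple file above is to feed it to a parser. The sketch below uses Python's standard-library `urllib.robotparser`; the page URLs and the `OtherBot` name are made up for illustration, and real search engines may resolve some rules differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# The simple robots.txt example, one directive per line.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /nogooglebot/

User-agent: *
Allow: /

Sitemap: http://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Googlebot is kept out of /nogooglebot/ but may crawl everything else.
googlebot_blocked = parser.can_fetch(
    "Googlebot", "http://www.example.com/nogooglebot/page.html"
)
googlebot_home = parser.can_fetch("Googlebot", "http://www.example.com/")

# Any other crawler (hypothetical name) falls under the "*" group.
other_bot = parser.can_fetch(
    "OtherBot", "http://www.example.com/nogooglebot/page.html"
)

print(googlebot_blocked, googlebot_home, other_bot)
print(parser.site_maps())  # site_maps() requires Python 3.8 or newer
```

Only Googlebot is refused, and only inside `/nogooglebot/`; every other request is allowed.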

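Examples for Blocking Googlebot:

# Example 1: Block only Googlebot
User-agent: Googlebot
Disallow: /

# Example 2: Block Googlebot as well as AdsBot
User-agent: Googlebot
User-agent: AdsBot-Google
Disallow: /

# Example 3: Block all but AdsBot crawlers
User-agent: *
Disallow: /

In Example 3, AdsBot crawlers are not affected because they ignore the * wildcard and must be named explicitly.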

Robots.txt Syntax You Can Use

Syntax can be considered the language of robots.txt files. There are a few well-known terms that you will come across when you dive deep into robots.txt files. These include:

User-agent

User-agent names the specific crawler (for example, Googlebot) to which the instructions that follow apply. An asterisk (*) addresses all crawlers.

Disallow

Disallow instructs the user agent not to crawl a particular URL. Only one “Disallow” line is allowed for each URL.

Allow

Allow enables you to instruct Googlebot that it may access a page or subfolder even though its parent page or folder is disallowed.

Sitemap

The Sitemap directive points to the location of the XML sitemap(s) associated with the site. The directive is supported by most major search engines, including Google.
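The four directives can be combined in one group. The following is a minimal sketch, assuming WordPress's usual `/wp-admin/` paths and the example domain used later in this guide. Python's parser has traditionally applied rules in file order, so the Allow line is listed before the broader Disallow; Google instead picks the most specific matching rule, so for Googlebot the order would not matter.

```python
from urllib.robotparser import RobotFileParser

# One group that uses all four directives described above.
ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/

Sitemap: https://www.smoothrides.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

site = "https://www.smoothrides.com"

# The Allow line carves one file out of the disallowed folder.
ajax_ok = parser.can_fetch("Googlebot", site + "/wp-admin/admin-ajax.php")

# Everything else under /wp-admin/ stays blocked.
admin_ok = parser.can_fetch("Googlebot", site + "/wp-admin/options.php")

print(ajax_ok, admin_ok)
```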

Does Your WordPress Website Need a Robots.txt File?

Yes, of course, your website needs a robots.txt file. Without it, search engines will find it challenging to decide which pages or folders need to be crawled and which do not. When you have just launched a WordPress blog, this won’t matter much. But if you want to control how your site is crawled and indexed, it becomes essential to pay attention to the robots.txt file.

Each search bot has a crawl quota, which means that it crawls only a limited number of pages during a session. If the bot doesn’t finish crawling your site in one session, it comes back and continues in the next one. This delay can slow down the rate at which your website gets indexed.

For example, if you operate a ride-hailing business online and want to rank at the top of the search results, it becomes essential to optimize your pages. Also, make the site SEO-friendly so that bots find it easier to crawl and index your ride-hailing brand.

You can fix the issue by disallowing search bots from crawling unnecessary pages. By eliminating unnecessary page crawls, you save crawl quota, which helps search engines index your business website pages more quickly.
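As a rough sketch of the idea, the helper below (a hypothetical function, not part of WordPress or any plugin) builds a robots.txt that keeps crawlers out of low-value sections. The `/internal-search/` path and the blog URL are assumptions chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser


def build_robots_txt(disallowed_paths, user_agent="*"):
    """Return robots.txt text that blocks the given paths for one user agent."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallowed_paths]
    return "\n".join(lines) + "\n"


# Hypothetical low-value sections that should not use up crawl quota.
robots_txt = build_robots_txt(["/wp-admin/", "/internal-search/"])

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

site = "https://www.smoothrides.com"
admin_allowed = parser.can_fetch("Googlebot", site + "/wp-admin/")
post_allowed = parser.can_fetch("Googlebot", site + "/blog/first-ride/")

print(robots_txt)
print(admin_allowed, post_allowed)
```

The blocked sections are skipped, while regular content such as blog posts stays crawlable.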

One more purpose of the Robots.txt file is to restrict search engines from indexing certain pages. It is not the most reliable technique to hide content, but it helps keep pages out of search results. Disallowing the wrong pages, however, can be dangerous for your website. The robots.txt file is very beneficial in certain situations, such as:

- Preventing duplicate content from appearing in the search results;

- Keeping certain sections of the site private;

- Keeping internal pages out of the public search results;

- Specifying the location of sitemaps;

- Restricting search engines from indexing specific pages on the website;

- Preventing your server from being overloaded when many pages are crawled at the same time.

If there are no areas of your site where you need to control user-agent access, you may not need to pay much attention to the robots.txt file. But if there are, you need to focus on creating and managing the file.

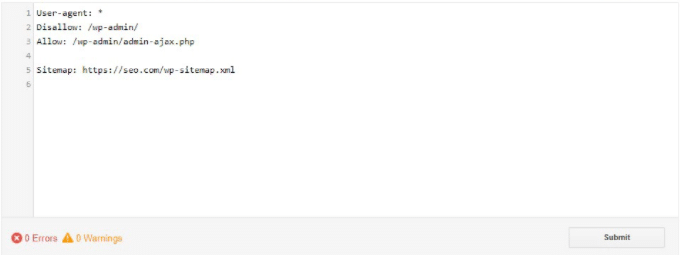

Steps to Follow for Creating Robots.txt for Your Website

If you are using WordPress for your ride-hailing website, it automatically provides you with a virtual robots.txt file, so you don’t have to create one from scratch. You can test the file by opening it on your WordPress site. Because the file is virtual, however, you cannot edit it directly; to change it, you need to create a physical file on your server or use a plugin. You can create and manage the robots.txt file using either of the two simple methods below.

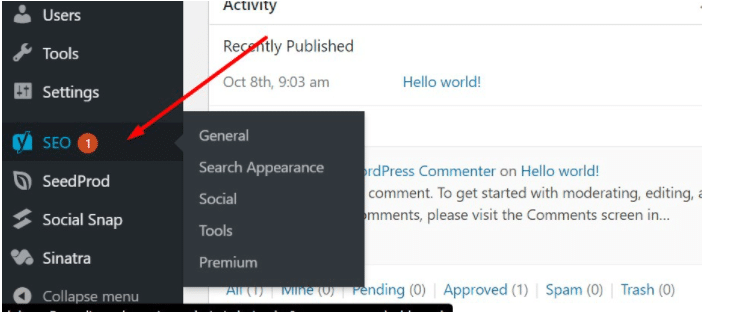

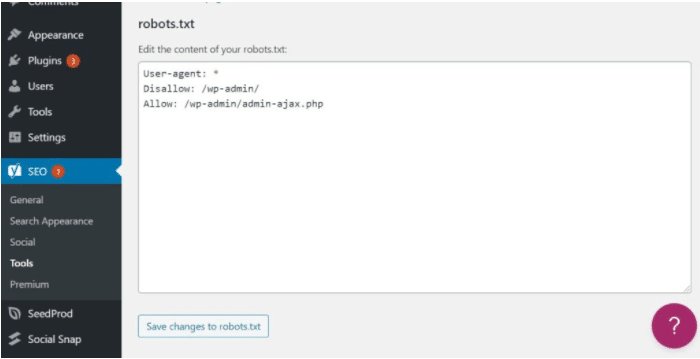

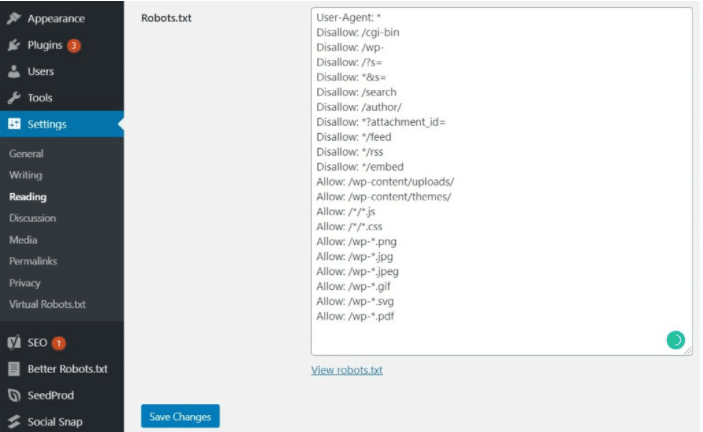

Method 1: Creating Robots.txt Using Yoast

Step 1. Install the Plugin

You can use the Yoast SEO plugin to handle everything efficiently. Install and activate it, or another SEO plugin of your choice, if you haven’t done so yet.

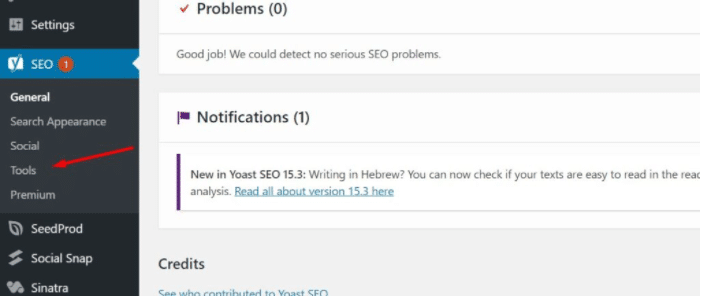

Step 2. Create the Robots.txt File

Once you install and activate the plugin, it’s time to get into action. Click on the “Tools” option and then click on “File editor” to make the changes you require. If you don’t have a robots.txt file, you can create one using this tool.

Here you can see the file you have built along with all its directives. The SEO plugin provides a few directives by default, while you need to add some essential ones yourself.

If you want to add more directives to the robots.txt file, you can easily do so in the plugin. Once you finish all the setup, click on “Save” to approve the changes. To verify the result, type your domain name followed by “/robots.txt” in your browser. For example, if your ride-hailing brand is “Smooth Rides” and its domain is www.smoothrides.com, you need to type https://www.smoothrides.com/robots.txt.

Also, make sure to include your sitemap URL in robots.txt, making it easier for search engines to find all the pages of your website. For example, if your taxi-hailing website has its sitemap at https://www.smoothrides.com/sitemap.xml, add the line Sitemap: https://www.smoothrides.com/sitemap.xml to the robots.txt file.
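Both checks can be scripted. The sketch below is only an illustration: `robots_url` and `declares_sitemap` are hypothetical helper names, and the sample file content is an assumption rather than what any plugin actually writes.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser


def robots_url(site_url):
    """Return the robots.txt address, which always sits at the root of the host."""
    return urljoin(site_url, "/robots.txt")


def declares_sitemap(robots_txt, sitemap_url):
    """Return True if the robots.txt text has a Sitemap line for sitemap_url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when the file lists no sitemaps.
    return sitemap_url in (parser.site_maps() or [])


# Assumed file content, for illustration only.
sample = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://www.smoothrides.com/sitemap.xml
"""

print(robots_url("https://www.smoothrides.com/blog/some-post/"))
print(declares_sitemap(sample, "https://www.smoothrides.com/sitemap.xml"))
```

To check the live file instead of a pasted copy, `RobotFileParser` also offers `set_url()` and `read()`, which download the file before parsing it.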

Another useful directive is one that blocks bots from crawling the pictures on your site. You can easily block Googlebot from your image folder by making this change in the robots.txt file. If you don’t know which folder holds your images, click on an image, choose the option to open it, and check the URL.
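A minimal sketch of such a rule follows, assuming the images live in WordPress's default `/wp-content/uploads/` folder and that the crawler to block is Google's image crawler, `Googlebot-Image`. The image file name is made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Keep Google's image crawler out of the uploads folder (assumed location).
ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /wp-content/uploads/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

image = "https://www.smoothrides.com/wp-content/uploads/2021/05/car.png"

# The image crawler is refused; the regular Googlebot is not affected.
image_bot_allowed = parser.can_fetch("Googlebot-Image", image)
googlebot_allowed = parser.can_fetch("Googlebot", image)

print(image_bot_allowed, googlebot_allowed)
```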

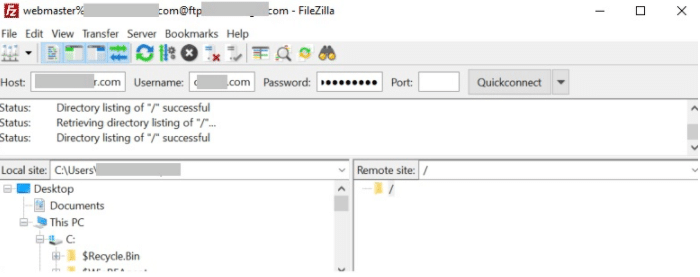

Method 2: Create Robots.txt File Using FTP

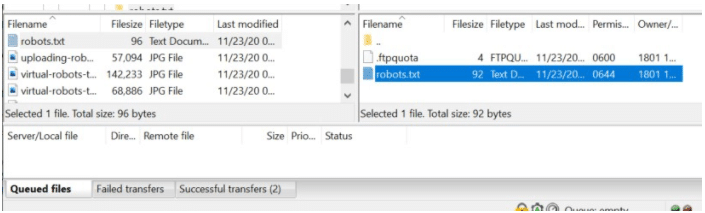

The best way to build a robots.txt file is to create it on your computer and upload it to the site. You can access your host using an FTP client. The credentials needed to log in are available in your hosting control panel.

Make sure to upload the robots.txt file to your website’s root folder; it must not be in a subdirectory. Once you log in with the FTP client, you can see whether the file already exists in your site’s root folder.

If the file exists, choose the “Edit” option, make your changes, and click on the “Save” option once you are done. If you don’t have the file, you can create one using any text editor of your choice and add directives to it.

Include all the directives of your choice and save your robots.txt file. Now upload the file using the FTP client. To check that the file was uploaded, type your domain name followed by “/robots.txt” in the same way as described above.
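If you prefer a script to a graphical FTP client, the same upload can be sketched with Python's standard `ftplib`. Everything site-specific here is an assumption: the host, the credentials, and the `remote_dir` that maps to your site root (often something like `public_html`) all depend on your hosting provider, and some hosts only accept FTPS or SFTP instead of plain FTP.

```python
import io
from ftplib import FTP


def robots_txt_bytes(directives):
    """Join directive lines into the bytes of a robots.txt file."""
    return ("\n".join(directives) + "\n").encode("utf-8")


def upload_robots_txt(host, user, password, directives, remote_dir="/"):
    """Upload robots.txt into remote_dir, which must be the site root."""
    with FTP(host) as ftp:
        ftp.login(user=user, passwd=password)
        ftp.cwd(remote_dir)
        ftp.storbinary("STOR robots.txt", io.BytesIO(robots_txt_bytes(directives)))


# Build the file content locally; no connection is made at this point.
payload = robots_txt_bytes([
    "User-agent: *",
    "Disallow: /wp-admin/",
    "Sitemap: https://www.smoothrides.com/sitemap.xml",
])
print(payload.decode("utf-8"))
```

Calling `upload_robots_txt(...)` with your own credentials performs the actual transfer; the block above only prepares the content.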

That’s all! You have successfully uploaded your robots.txt file manually to your WordPress site.

Pros & Cons of Using and Optimizing WordPress Robots.txt

You need to be extra careful while using robots.txt files for your ride-hailing business website, as they can significantly affect your SEO results. It is pretty similar to optimizing your app on the app store.

Remember that a single mistake can cost you countless customers. Hence take extra care while using, editing, or uploading the robots.txt file to your WordPress site. Also keep an eye on changing ride-hailing standards, as this helps you see where you are lacking and what you need to do to get the desired results.

If you own a small-scale taxi-hailing business and don’t know exactly what is best for you, it is a better option to keep your robots.txt file simple. A large-scale brand, however, must use the robots.txt file actively, as it helps Google bots know which pages to crawl and index in search results.

Additionally, using Robots.txt helps prevent backlink scanning, limit the chances of duplicate content, and much more. A robots.txt file is undoubtedly beneficial when handled properly, but it also comes with minor drawbacks. Let’s quickly check its advantages as well as its disadvantages.

Advantages:

Block Bots

Many things are still disordered while you are designing a taxi-hailing website. Hence it becomes important to block Google bots from indexing such content.

Insert Sitemap

A sitemap helps Google learn everything about your site. If you own a large-scale transportation business with a large number of pages to index and no sitemap, Google bots may run out of crawl resources before they reach everything, so some of your primary content may not get indexed.

Prevent Bots From Checking Backlinks

Are you wondering how you can do it? You can make it harder for your competitors to check your backlinks simply by blocking the bots of backlink-checking tools in the robots.txt file. Hence the robots.txt file is handy, especially when you don’t want your competitors to learn your marketing and other promotional strategies.
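As an illustration, the sketch below blocks two widely used SEO crawlers, `AhrefsBot` and `SemrushBot`, by listing both user agents in one group. Which bots you block is your own choice, these two are only examples, and the rule works only for bots that choose to honor robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Two user agents can share one group of rules.
ROBOTS_TXT = """\
User-agent: AhrefsBot
User-agent: SemrushBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

page = "https://www.smoothrides.com/pricing/"  # hypothetical page

ahrefs_allowed = parser.can_fetch("AhrefsBot", page)
semrush_allowed = parser.can_fetch("SemrushBot", page)

# Search engine crawlers are not named, so they remain free to crawl.
googlebot_allowed = parser.can_fetch("Googlebot", page)

print(ahrefs_allowed, semrush_allowed, googlebot_allowed)
```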

Avert Harmful Bots

Are you looking to keep unwanted bots away from your WordPress site? The robots.txt file is a simple way to turn them away. Keep in mind that the file is only advisory: well-behaved bots obey it, but malicious ones can ignore it, so it does not replace real security measures against hackers.

Hide Sensitive Folders

The robots.txt file helps you block sensitive folders, which keeps search bots from indexing their content and makes critical information harder to find through search results.

Disadvantages:

Makes Things Easier for Attackers

Every good thing comes with drawbacks, and robots.txt is no exception. Because the file is public, it can reveal your site structure and the location of private data to hackers. This is not a worry if you have configured your web server security properly.

Need Extra Care

As stated above, you need to be extra careful while using the robots.txt file, as a single mistake can cost you far more than you imagine. Even a tiny error can make a search engine drop your indexed pages, costing you your rank in the search results.
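One such tiny error is leaving a site-wide “Disallow: /” in the file. A quick sanity check before uploading can catch it; the sketch below uses a hypothetical helper, `blocks_whole_site`, and assumes Googlebot and the example domain as defaults.

```python
from urllib.robotparser import RobotFileParser


def blocks_whole_site(robots_txt, user_agent="Googlebot",
                      site="https://www.smoothrides.com"):
    """Return True if user_agent is not even allowed to fetch the home page."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, site + "/")


# A single stray slash separates a harmless file from a disastrous one.
risky = "User-agent: *\nDisallow: /\n"
safe = "User-agent: *\nDisallow: /wp-admin/\n"

print(blocks_whole_site(risky))
print(blocks_whole_site(safe))
```

The check covers only the home page for one crawler, so treat it as a first line of defense rather than a full validation.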

Final Note

Are you looking for WordPress SEO tips to improve your business ranking? Want control over how your website’s pages are crawled and indexed? If yes, then this guide on robots.txt will help you. You can perform many essential SEO-related tasks through the robots.txt file.

Robots.txt is a text file that tells search engine bots such as Googlebot which pages to crawl and index and which ones to avoid. The file gives you control over how search engines crawl your site. When you set it up properly, it can deliver strong SEO results. This guide should help you understand how Robots.txt works and how to create and use it to improve your online presence. What are you waiting for? Start optimizing WordPress robots.txt!
